Auto scaling in Azure Kubernetes Service (AKS) is crucial for optimizing resource utilization and ensuring your applications can handle fluctuating demand. It lets you automatically adjust the number of pod replicas based on observed metrics such as CPU usage, memory consumption, and request throughput. With auto scaling, you can avoid over-provisioning and reduce costs while maintaining availability and performance.

Understanding the Benefits of Auto Scaling in AKS

Auto scaling brings several advantages to your AKS deployments. It keeps the number of pods matched to demand, scaling up during peak times and scaling down during periods of low activity, which saves cost by avoiding unnecessary resource allocation. It also improves availability by adding capacity during unexpected traffic spikes. Replacing failed pods is handled separately by the Deployment or ReplicaSet controller, which maintains the replica count chosen by the autoscaler.

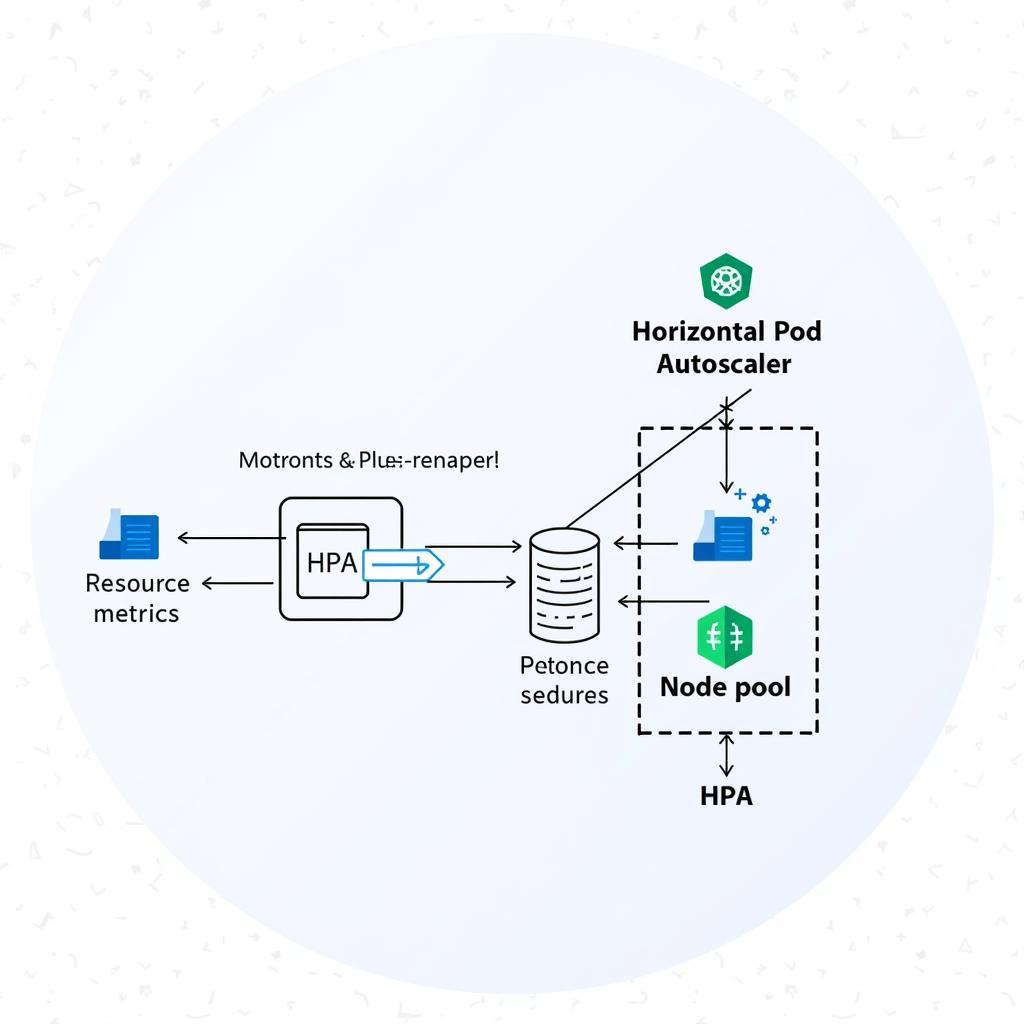

How Auto Scaling Works in AKS

AKS auto scaling at the pod level relies on the Horizontal Pod Autoscaler (HPA), a Kubernetes feature that automatically scales the number of pods in a Deployment, ReplicaSet, or StatefulSet based on observed metrics. The HPA reads resource metrics collected by the Metrics Server and adjusts the number of pods accordingly. You define a target value for each metric, and the HPA adds or removes replicas to keep the observed value close to that target. For instance, with a target of 70% average CPU utilization, the HPA scales up when the average across all pods rises above 70% and scales down when it falls well below it.
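The replica calculation behind this behavior can be sketched in a few lines. The version below follows the ratio rule described in the Kubernetes HPA documentation (desired = ceil(current replicas × current value / target value), with a default tolerance of 0.1). The function names and the example numbers are illustrative assumptions, and the real controller also accounts for details such as pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas, current_value, target_value, tolerance=0.1):
    """Approximate the HPA ratio rule for a single metric.

    current_value and target_value use the same unit, e.g. average CPU
    utilization in percent. The 0.1 tolerance matches the Kubernetes default.
    """
    ratio = current_value / target_value
    # Within the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def clamp(replicas, min_replicas, max_replicas):
    """Keep the result inside the configured minReplicas/maxReplicas bounds."""
    return max(min_replicas, min(max_replicas, replicas))


# Hypothetical workload: 4 pods, target of 70% average CPU utilization.
print(desired_replicas(4, 90, 70))  # load above target: scale up
print(desired_replicas(4, 35, 70))  # load at half the target: scale down
print(desired_replicas(4, 66, 70))  # within tolerance: no change
```

Because there is a single target rather than separate "up" and "down" thresholds, how far the observed value drifts from the target determines how many replicas are added or removed.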

Auto Scaling Architecture in AKS

Setting Up Auto Scaling in AKS

Configuring auto scaling in AKS involves defining a HorizontalPodAutoscaler resource in your Kubernetes YAML configuration. You specify the target Deployment or ReplicaSet, the metrics to monitor (CPU, memory, or custom metrics), and the target value for each metric. You also set minimum and maximum replica counts so that the workload never drops below a safe baseline or grows beyond what the cluster can support. For simple CPU-based cases, the kubectl autoscale command creates an HPA without writing YAML.
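As a rough illustration of what such a resource contains, the sketch below builds an `autoscaling/v2` HorizontalPodAutoscaler manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `build_hpa_manifest`, the Deployment name `web`, and the numbers are assumptions chosen for the example, not values from a real cluster.

```python
import json


def build_hpa_manifest(name, deployment, min_replicas, max_replicas, cpu_target_percent):
    """Return an autoscaling/v2 HPA manifest targeting a Deployment on CPU utilization."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("expected 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The workload whose replica count the HPA manages.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        # Utilization is a percentage of the pods' CPU requests.
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_target_percent,
                        },
                    },
                }
            ],
        },
    }


# Hypothetical example: keep a "web" Deployment between 2 and 10 pods at ~70% CPU.
manifest = build_hpa_manifest("web-hpa", "web", 2, 10, 70)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it with kubectl would create the HPA, assuming a Deployment with that name exists and its containers declare CPU requests.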

Best Practices for Auto Scaling in AKS

Implementing effective auto scaling requires attention to a few factors. Choose metrics that accurately reflect your application’s load. Set CPU and memory requests on your containers, because utilization targets are calculated as a percentage of the requested amount. Set realistic targets to avoid frequent scaling events (often called flapping) and keep operation stable. Monitor auto scaling behavior and adjust the configuration based on real-world traffic patterns.
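One mechanism that limits frequent scaling events is the HPA's scale-down stabilization window, which defaults to 300 seconds in Kubernetes. The sketch below is a simplified model of that idea, not the controller's actual code: it keeps recent recommendations and acts on the highest one inside the window, so scale-ups happen immediately while scale-downs wait until the load has stayed low. The class name and the sample timeline are illustrative assumptions.

```python
from collections import deque


class ScaleDownStabilizer:
    """Simplified model of scale-down stabilization over a sliding time window."""

    def __init__(self, window_seconds=300):
        self.window_seconds = window_seconds
        self._history = deque()  # (timestamp in seconds, recommended replicas)

    def recommend(self, now, raw_recommendation):
        """Record a raw recommendation and return the stabilized replica count."""
        self._history.append((now, raw_recommendation))
        # Drop recommendations that are older than the window.
        while self._history[0][0] < now - self.window_seconds:
            self._history.popleft()
        # Acting on the maximum delays scale-down but never delays scale-up.
        return max(replicas for _, replicas in self._history)


# Hypothetical timeline: load drops right after t=0 and stays low.
stabilizer = ScaleDownStabilizer(window_seconds=300)
timeline = [(0, 8), (60, 4), (120, 4), (300, 4), (361, 4)]
stabilized = [stabilizer.recommend(t, r) for t, r in timeline]
print(stabilized)
```

In this model the replica count stays at the earlier, higher value until that recommendation ages out of the window, which is why a short dip in traffic does not immediately remove pods.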

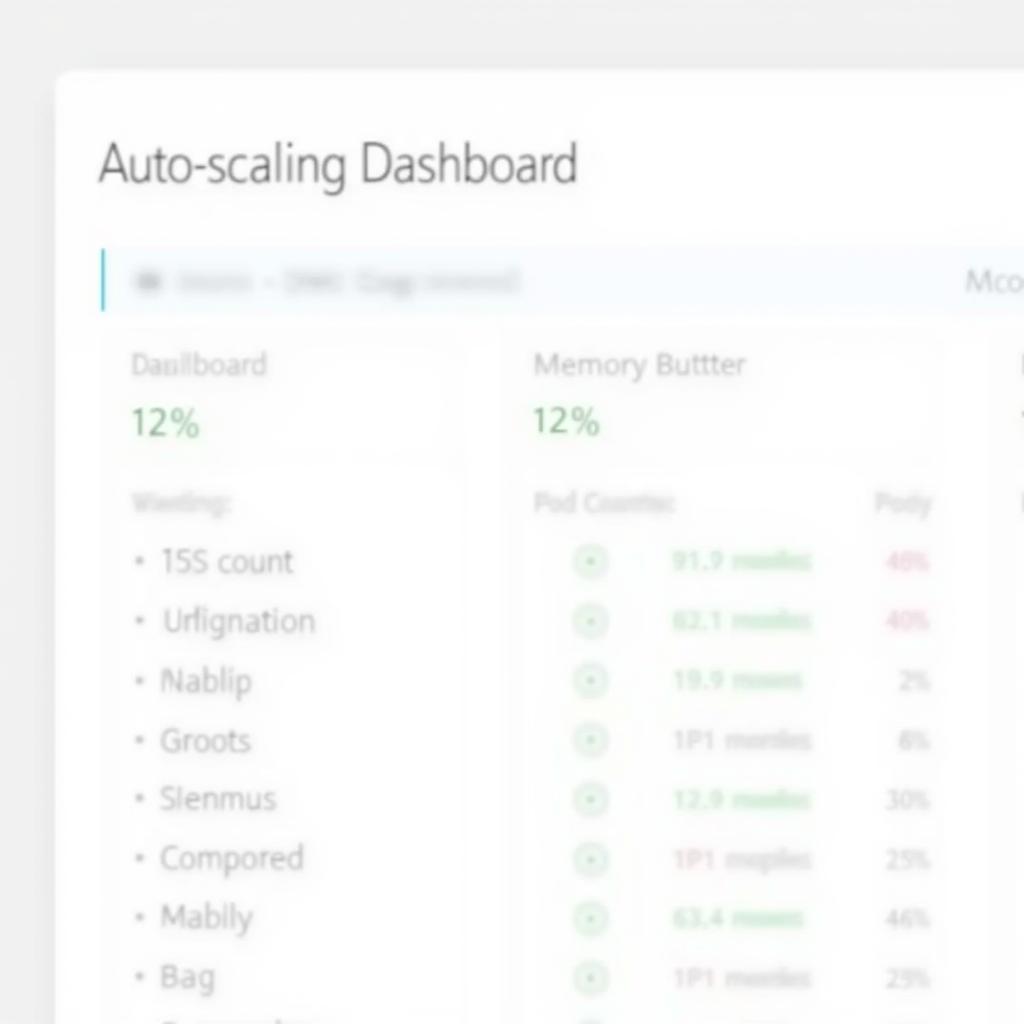

Monitoring Metrics for AKS Auto Scaling

Common Auto Scaling Scenarios in AKS

Auto scaling addresses a range of scenarios in AKS. For web applications, it absorbs traffic spikes during peak hours or promotional campaigns. For batch or queue-driven processing, it scales up worker pods to speed up processing and scales down when the backlog is cleared. For critical services, a minimum replica count keeps enough pods running during quiet periods, while the workload controller replaces any pods that fail.

Troubleshooting Auto Scaling Issues in AKS

While auto scaling simplifies resource management, it can still run into issues. Common problems include poorly chosen targets, missing resource requests or metrics, insufficient capacity in the node pool, and conflicting scaling policies. Troubleshooting involves reviewing the HPA's status conditions and events (for example with kubectl describe hpa), monitoring resource utilization, and verifying the scaling configuration.
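When verifying a configuration, the HPA's status conditions are a useful starting point. The sketch below assumes you have the HPA object as a dictionary (for example, parsed from `kubectl get hpa <name> -o json`) and checks the three standard condition types: `AbleToScale`, `ScalingActive`, and `ScalingLimited`. The function name, the sample object, and its message text are hypothetical, written for illustration rather than copied from a real cluster.

```python
def diagnose_hpa(hpa):
    """Return human-readable findings based on an HPA's status conditions."""
    findings = []
    for cond in hpa.get("status", {}).get("conditions", []):
        ctype = cond.get("type")
        status = cond.get("status")
        detail = "{}: {}".format(cond.get("reason", ""), cond.get("message", ""))
        if ctype == "AbleToScale" and status == "False":
            findings.append("HPA cannot read or update the target's scale (" + detail + ")")
        elif ctype == "ScalingActive" and status == "False":
            findings.append("HPA cannot compute a replica count from its metrics (" + detail + ")")
        elif ctype == "ScalingLimited" and status == "True":
            findings.append("Replica count is capped by minReplicas or maxReplicas (" + detail + ")")
    return findings


# Hypothetical HPA status, hand-written for this example.
sample_hpa = {
    "status": {
        "conditions": [
            {"type": "AbleToScale", "status": "True", "reason": "ReadyForNewScale"},
            {
                "type": "ScalingActive",
                "status": "False",
                "reason": "FailedGetResourceMetric",
                "message": "illustrative message: metrics unavailable",
            },
            {"type": "ScalingLimited", "status": "True", "reason": "TooManyReplicas"},
        ]
    }
}

for finding in diagnose_hpa(sample_hpa):
    print(finding)
```

A `ScalingActive` condition that is false often points at missing metrics or resource requests, while `ScalingLimited` suggests revisiting the minimum and maximum replica counts.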

Advanced Auto Scaling Techniques in AKS

For more complex scenarios, you can use advanced techniques such as custom metrics and predictive scaling. Custom metrics let you scale on application-specific signals, such as the number of active users or messages in a queue. Predictive scaling anticipates future demand based on historical data and scales resources ahead of time to avoid performance bottlenecks. The HPA itself is reactive, so predictive scaling generally relies on additional tooling.
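To make the queue example concrete, the sketch below estimates a worker count from a queue length and a per-pod target, which mirrors how an average-value target behaves: the total metric is divided across pods and compared against the target per pod. The function name, the target of 30 messages per pod, and the replica bounds are assumptions for illustration. The sketch leaves out the tolerance band and the metrics adapter that a real setup needs to expose the queue length to the HPA.

```python
import math


def workers_for_queue(queue_length, target_per_pod, min_replicas, max_replicas):
    """Estimate worker pods so each handles about target_per_pod messages."""
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    wanted = math.ceil(queue_length / target_per_pod)
    # Respect the configured replica bounds, as the HPA does.
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical queue sizes with a target of 30 messages per pod, 1 to 20 pods.
for backlog in (0, 450, 10000):
    print(backlog, workers_for_queue(backlog, 30, 1, 20))
```

The bounds matter at both extremes: an empty queue still leaves the minimum number of workers running, and a very large backlog cannot push the workload past the maximum.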

Advanced Auto Scaling Configurations in AKS

In conclusion, auto scaling in Azure Kubernetes Service (AKS) is a powerful tool for optimizing resource utilization, maintaining application availability, and reducing costs. By understanding its benefits, implementation, and best practices, you can use auto scaling to manage your containerized applications and meet changing demand.

FAQ

- What is the difference between vertical and horizontal pod autoscaling?

- How do I choose the right metrics for auto scaling?

- What are the limitations of auto scaling in AKS?

- Can I use custom metrics for auto scaling?

- How do I monitor the performance of auto scaling?

- What are the best practices for setting scaling thresholds?

- How can I troubleshoot auto scaling issues?
